The software industry is moving fast; that much should be crystal clear. What is considered the state-of-the-art model for software development and deployment today often becomes, within a few years, a barrier to business agility for most organizations. A lack of agility limits innovation and slows the response time organizations need to react, correct course, or deliver business value before their competitors. The era of cloud computing has brought a myriad of new choices for software delivery. Computing resources, storage and networks can be provisioned elastically and on demand. Applications can be scaled in a matter of seconds, and most PaaS solutions also provide proactive application monitoring, recovery, load balancing and centralized log aggregation.

When talking about scalability, cloud-centric and monolithic architectures, a few issues and discrepancies stand out immediately. Because they are built as a single large code base, monolithic applications can't be partitioned into independently deployable components. When scaling a monolithic application we must scale all of its components, not just the ones under heavy load. Moreover, the components often have different resource requirements: some might be CPU-intensive, while others might be I/O-bound. Because a monolith lacks this autonomous, componentized nature, fault isolation can't be achieved, so partial failures usually cause total service downtime.

Cloud-native architectures attempt to solve the problems above by decomposing monolithic systems into self-sufficient units called microservices. Each microservice encapsulates a minimal business capability in a single-responsibility artifact, with the aim of performing that one capability as well as possible. I had the opportunity to attend the Spring I/O conference in Barcelona, and almost all the speakers mentioned that ubiquitous word: microservice. There were some very prominent and interesting talks, but in the end all of them tended to converge on that term. Projects like Spring Boot and Spring Cloud are already bringing the Spring programming model into the world of cloud-based applications.

Cloud-native architectures and microservices seem to be the logical evolution of software engineering and delivery. The benefits of microservices extend to other aspects as well: parallelizing the development workflow by dividing bounded contexts between developers instead of assigning the whole team to the same sandbox; decoupling from a single technology stack (for instance, one microservice could be written in Java, another in Ruby or Python, and so on); and implementing new business requirements independently from the rest of the system (in a monolithic application, the entire application must be redeployed when one part changes). As we will see, the migration to the cloud implies more than purely technical changes.

Moving to the cloud

As stated in Matt Stine's book Migrating to Cloud-Native Application Architectures, for organizations to successfully adopt the cloud-native approach, cultural and organizational changes are as essential as technical ones. It's not enough to introduce agile practices in our team if the organization itself is stuck in traditional process management. To establish an analogy, think about the following quote I have borrowed from the movie Milky Way:

"You can't focus on healing one organ and take for granted the rest of the organism."

The same approach can be applied to organizations. Traditionally, an organization is divided into various units called silos, each operating inside an isolated context with its own vocabulary, practices, habits and forms of communication. The system administration silo tends to keep the IT ecosystem stable and available, sticking to established SLAs and performance indicators. Changes coming from the development team are not very welcome, as they tend to introduce risk into the system. Therefore, as long as changes can't be introduced safely, the speed of innovation and the delivery of new business value are governed by heavyweight processes that clash directly with cloud-native principles. DevOps is an emerging movement whose goal is to establish lines of collaboration between development and IT operations, which can lead to continuous value delivery without compromising performance, safety, availability or speed of innovation.

The silos are dissolved and recreated as a totally new organizational structure with shared agile practices, vocabulary and communication channels. Instead of focusing on processes, we focus on people as the foundation of change and innovation.

The development team can provision the infrastructure required for application deployment using a simple API to create virtual machine instances, storage and network topologies. That way, teams become autonomous entities coexisting inside a fully decentralized ecosystem. When new members join a team, they don't have to deal with an enormous codebase, since separate bounded contexts reduce the coordination overhead and make developers productive from the beginning. Teams can work independently, collaborating through REST APIs, and deploy new functionality in relaxed harmony with the rest of the teams.

Distributed patterns

The migration to cloud-native architectures comes at the cost of the complexity involved in coordinating a distributed ecosystem. As you will see, some new infrastructure building blocks must be built as a trade-off for adopting cloud-native principles. A set of well-known distributed patterns will make the ascent to the cloud less painful.

API Gateway

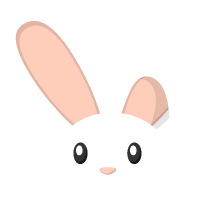

The clients that interact with cloud-based applications can vary from mobile devices to desktop systems and gaming consoles. Some of these clients run in constrained environments where battery life and network bandwidth are at a premium. If a client needs to query a large number of microservices to satisfy its UI needs, that causes problems such as high latency and an excessive number of round trips. To address this issue, the API gateway pattern provides a single entry point for a specific kind of client, where each request is either routed to one microservice or fanned out to several. The gateway calls those microservices concurrently, aggregates their responses, and returns the combined result to the client.
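To make the aggregation step concrete, here is a minimal Python sketch. It assumes three hypothetical microservices, `user_service`, `order_service` and `recommendation_service`, simulated with `asyncio.sleep` instead of real HTTP calls. The hypothetical gateway function `gateway_profile_page` fans out to all three concurrently with `asyncio.gather` and merges their responses, so the client makes one round trip instead of three.

```python
import asyncio


# Hypothetical backend microservices; asyncio.sleep stands in for network latency.
async def user_service(user_id: str) -> dict:
    await asyncio.sleep(0.05)
    return {"id": user_id, "name": "Alice"}


async def order_service(user_id: str) -> dict:
    await asyncio.sleep(0.05)
    return {"orders": [101, 102]}


async def recommendation_service(user_id: str) -> dict:
    await asyncio.sleep(0.05)
    return {"recommended": ["book", "lamp"]}


async def gateway_profile_page(user_id: str) -> dict:
    """Single entry point for the client: fan out concurrently, then aggregate."""
    user, orders, recs = await asyncio.gather(
        user_service(user_id),
        order_service(user_id),
        recommendation_service(user_id),
    )
    # Merge the partial responses into one payload shaped for this client's UI.
    return {**user, **orders, **recs}


if __name__ == "__main__":
    print(asyncio.run(gateway_profile_page("42")))
```

Because the three calls run concurrently, the gateway's latency is roughly that of the slowest service rather than the sum of all three.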

Service discovery / registration

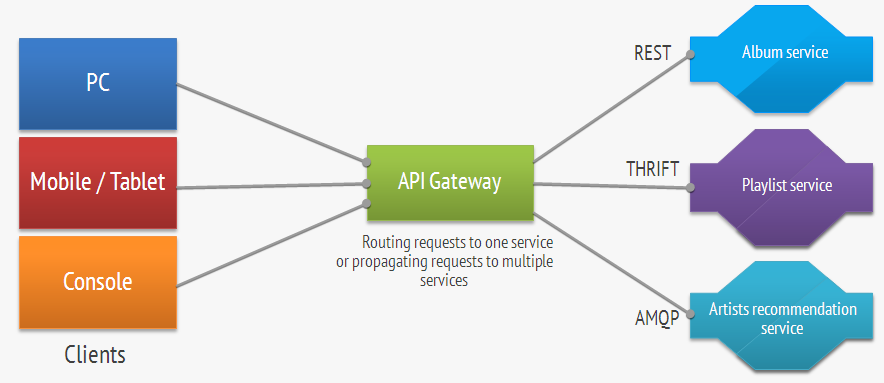

The service discovery pattern acts as a repository of microservice instances. Unlike traditional distributed system deployments, cloud-based environments are characterized by ephemeral resources that are created and disposed of elastically. Docker and LXC have popularized containers as a new medium for distributing applications in lightweight, portable units that can be provisioned much faster than virtual machine instances. The number and locations of instances change dynamically, and every time a new microservice instance is deployed, it is bound to a dynamically assigned IP address and port. For a client to successfully contact the microservice, it must query the service registry, which in turn resolves the location of the microservice.

When a microservice is deployed, it registers itself with the service registry. The service registry periodically sends a heartbeat to the microservice; if the microservice doesn't respond within a specified timeout, it is automatically removed from the registry.
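The registration and eviction cycle can be sketched as an in-memory registry. This is a simplified illustration, not the API of any real registry product: `ServiceRegistry`, `Instance` and the `ping` health probe are hypothetical names, and the clock is injectable so eviction can be demonstrated without waiting. It follows the model described here, where the registry probes each instance; some real registries invert the direction and have instances send periodic lease renewals instead.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Instance:
    service: str               # logical service name, e.g. "orders"
    host: str                  # dynamically assigned address
    port: int                  # dynamically assigned port
    ping: Callable[[], bool]   # hypothetical health probe: True if the instance answers
    last_seen: float = 0.0     # time of the last successful heartbeat


class ServiceRegistry:
    """In-memory registry that probes instances and evicts silent ones."""

    def __init__(self, timeout: float, clock: Callable[[], float] = time.monotonic):
        self.timeout = timeout
        self.clock = clock
        self.instances: Dict[str, Instance] = {}

    def register(self, inst: Instance) -> None:
        # Called by a microservice on startup with its current host and port.
        inst.last_seen = self.clock()
        self.instances[f"{inst.host}:{inst.port}"] = inst

    def heartbeat_round(self) -> None:
        # Probe every instance; evict those silent for longer than `timeout`.
        now = self.clock()
        for key, inst in list(self.instances.items()):
            try:
                alive = inst.ping()
            except Exception:
                alive = False  # an unreachable instance counts as a missed heartbeat
            if alive:
                inst.last_seen = now
            elif now - inst.last_seen > self.timeout:
                del self.instances[key]

    def lookup(self, service: str) -> List[str]:
        # Clients resolve a logical name to the current "host:port" locations.
        return [key for key, inst in self.instances.items() if inst.service == service]


if __name__ == "__main__":
    fake_now = [0.0]  # fake clock so the example doesn't have to sleep
    registry = ServiceRegistry(timeout=30.0, clock=lambda: fake_now[0])
    registry.register(Instance("orders", "10.0.0.5", 49153, ping=lambda: True))
    registry.register(Instance("orders", "10.0.0.7", 49170, ping=lambda: False))
    fake_now[0] = 31.0
    registry.heartbeat_round()
    print(registry.lookup("orders"))  # ['10.0.0.5:49153']
```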

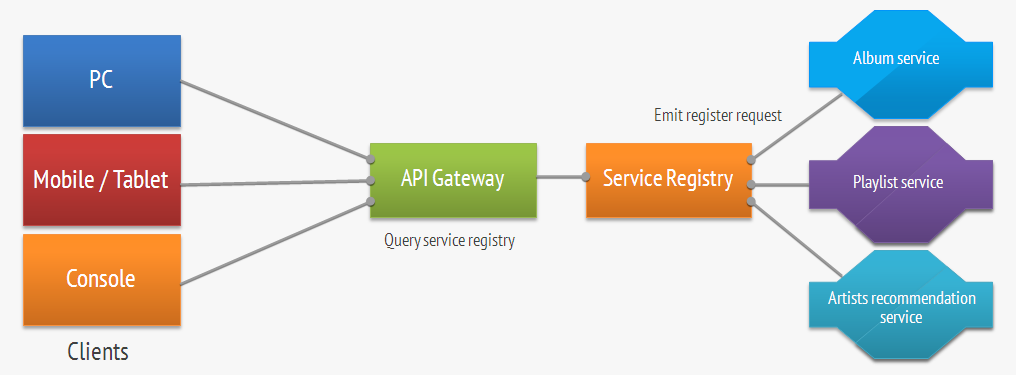

Routing / load balancing

Intelligent routing and load balancing across multiple microservices is a must in cloud-based distributed environments. The router / load balancer is another component of the distributed ecosystem, responsible for querying the service registry and forwarding requests to the appropriate microservice instances. This scenario is shown in figure 3.
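A client-side router that queries the registry on every request and rotates through the live instances (round-robin) can be sketched as follows. `RoundRobinRouter` is a hypothetical name, the registry is stubbed as a plain `lookup` function, and `forward` only builds the target URL instead of issuing a real HTTP request.

```python
import itertools
from typing import Callable, Dict, Iterator, List


class RoundRobinRouter:
    """Client-side router: resolves instances via the registry and rotates through them."""

    def __init__(self, lookup: Callable[[str], List[str]]):
        self.lookup = lookup  # assumed: a registry lookup returning "host:port" strings
        self.counters: Dict[str, Iterator[int]] = {}

    def choose(self, service: str) -> str:
        # Re-query on every request, because instances come and go dynamically.
        instances = self.lookup(service)
        if not instances:
            raise LookupError(f"no live instances of {service!r}")
        counter = self.counters.setdefault(service, itertools.count())
        return instances[next(counter) % len(instances)]

    def forward(self, service: str, path: str) -> str:
        # Stand-in for a real HTTP call: returns the URL the request would be sent to.
        return f"http://{self.choose(service)}{path}"


if __name__ == "__main__":
    live = {"orders": ["10.0.0.5:49153", "10.0.0.6:49160", "10.0.0.7:49170"]}
    router = RoundRobinRouter(lambda service: live.get(service, []))
    for _ in range(4):
        print(router.forward("orders", "/orders/42"))
```

Round-robin is only one possible strategy; a production load balancer might also weigh instances by response time or current load.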

Circuit breaker

In cloud-native architectures it is essential to guarantee fault tolerance and prevent cascading failures when one of the microservices gets out of control. Packaging and partitioning microservices into Linux containers can prevent a misbehaving microservice from consuming an entire machine's resources or affecting another container. That is a wise dose of defense at the infrastructure level. But what about protection at the application level? The circuit breaker pattern is based on the premise that an unhealthy microservice shouldn't bring down the services that depend on it. Calls routed to an unhealthy microservice shouldn't keep hammering it, making it impossible for the service to recover. A circuit breaker's behavior can be compared to the circuit breaker in our homes, which cuts off the current when it exceeds a certain threshold, preventing it from damaging devices.

Similarly, the circuit breaker pattern counts the number of failed calls, and when that number reaches the specified threshold, it transitions into the open state and makes subsequent calls fail immediately, without contacting the service. After a configured timeout, it lets a trial call through (the half-open state); if that call succeeds, the service is considered recovered, the circuit breaker closes, and calls are executed normally again.
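A minimal sketch of that state machine follows, assuming a threshold of consecutive failures and a fixed reset timeout. `CircuitBreaker` and `CircuitOpenError` are hypothetical names, not a particular library's API. Besides the closed and open states, it includes the half-open state commonly used to detect recovery: after `reset_timeout` seconds, one trial call is let through, and its outcome either closes the circuit or reopens it.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected without contacting the failing service."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 10.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.reset_timeout = reset_timeout          # seconds to stay open before a trial call
        self.clock = clock
        self.failures = 0
        self.state = "closed"
        self.opened_at = 0.0

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open: failing fast")
            self.state = "half-open"  # timeout elapsed: let one trial call through
        try:
            result = fn()
        except Exception:
            self._record_failure()
            raise
        # Any success resets the failure count and closes the circuit.
        self.failures = 0
        self.state = "closed"
        return result

    def _record_failure(self) -> None:
        self.failures += 1
        # A failed trial call, or reaching the threshold, (re)opens the circuit.
        if self.state == "half-open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = self.clock()


if __name__ == "__main__":
    fake_now = [0.0]  # fake clock so the example doesn't have to sleep
    breaker = CircuitBreaker(failure_threshold=2, reset_timeout=5.0, clock=lambda: fake_now[0])

    def broken_service() -> str:
        raise ConnectionError("service down")

    for _ in range(2):
        try:
            breaker.call(broken_service)
        except ConnectionError:
            pass
    print(breaker.state)  # open
    try:
        breaker.call(lambda: "ok")
    except CircuitOpenError as err:
        print(err)  # circuit open: failing fast
    fake_now[0] = 6.0  # reset timeout elapsed: the trial call succeeds
    print(breaker.call(lambda: "ok"), breaker.state)  # ok closed
```

The sketch is single-threaded; a breaker shared between concurrent request handlers would need a lock around its state transitions.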